Undersampling Method for Pattern Recognition in Non-stationary Environment

Published:

This was my first research project as an undergraduate. I invited four classmates to apply for funding from the “National university independent research project for undergraduate students”. We worked on pattern recognition problems in which the class distribution is neither balanced nor static. The research was supervised by Prof. W. W. Y. Ng, whose work covers neural network optimization and classification problems in machine learning. The findings are summarized in the conference paper “Effects of Different Base Classifiers to Learn++ Family Algorithms for Concept Drifting and Imbalanced Pattern Classification Problems”.

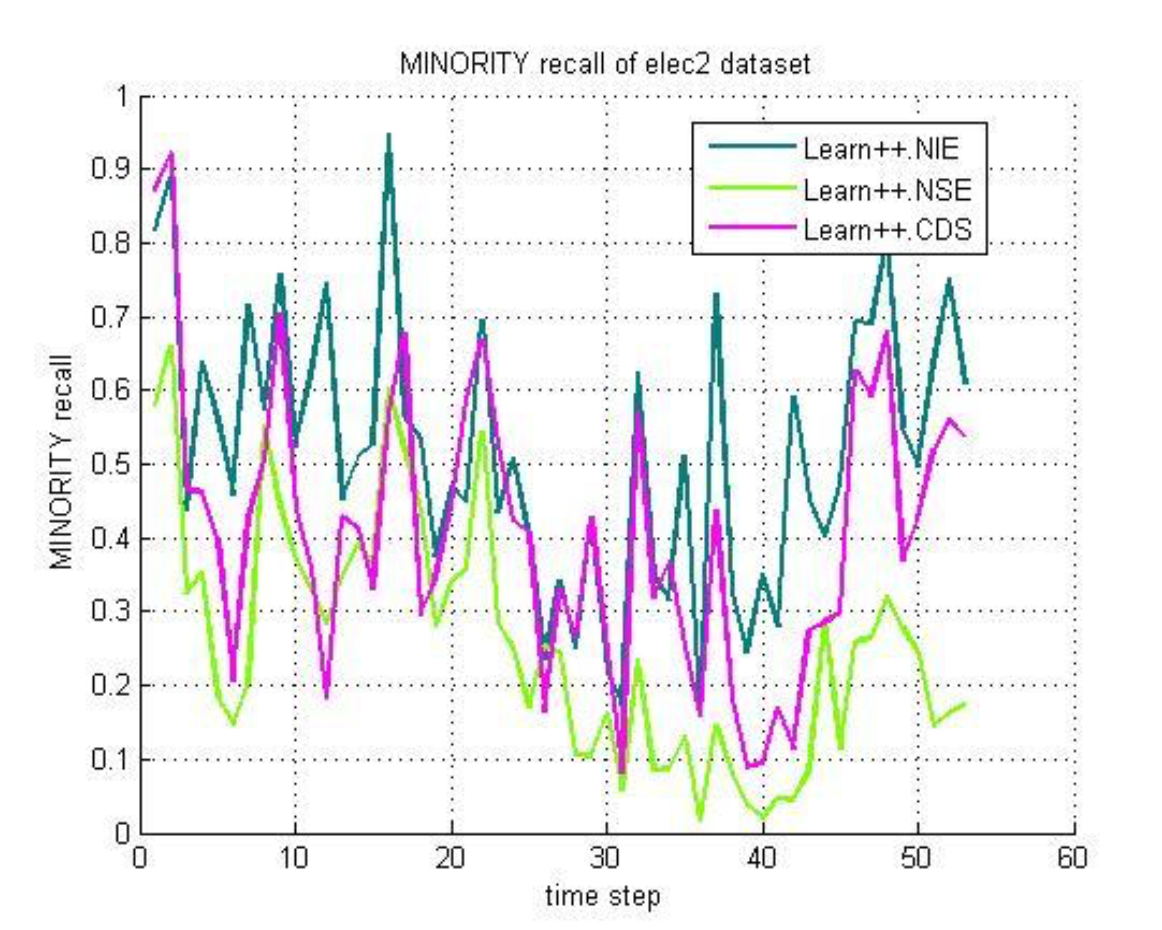

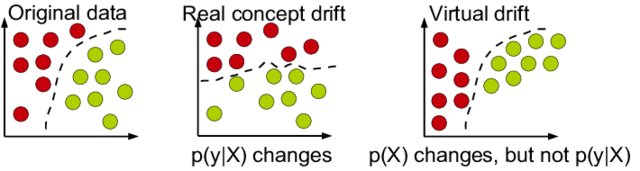

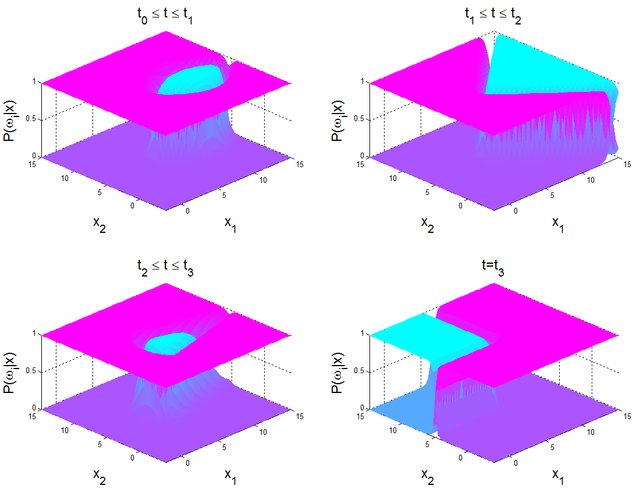

We focus on the Learn++ family of algorithms, which can deal effectively with dynamic data distributions. Changes in the class distribution of temporal data are referred to as “concept drift”, which calls for adaptive algorithms that help the classifier update its knowledge of the decision boundary and avoid performance decay. Detecting whether a real concept drift has occurred is itself a challenge.

<sub>Source: Gama, J., Žliobaitė, I., Bifet, A., Pechenizkiy, M., & Bouchachia, A. (2014). A survey on concept drift adaptation. ACM Computing Surveys (CSUR), 46(4), 44.</sub>
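To make the detection problem concrete, here is a minimal sketch of error-rate monitoring, loosely in the spirit of the Drift Detection Method (DDM) of Gama et al. (2004). It is not part of the Learn++ family and is not the method used in this project. The function name `detect_drift` and the parameters `warmup` and `k` are illustrative assumptions.

```python
import math

def detect_drift(errors, warmup=30, k=3.0):
    """Return the index at which drift is first flagged, or None.

    errors: iterable of 0/1 values, 1 meaning the classifier
            misclassified that sample.
    warmup: number of samples to observe before testing (assumption).
    k:      how many standard deviations above the best observed
            error level counts as drift (assumption).
    """
    n = 0
    n_err = 0
    p_min = float("inf")  # error rate at the best point seen so far
    s_min = float("inf")  # its standard deviation
    for i, e in enumerate(errors):
        n += 1
        n_err += e
        p = n_err / n                    # running error rate
        s = math.sqrt(p * (1 - p) / n)   # binomial standard deviation
        if n < warmup:
            continue
        if p + s < p_min + s_min:        # new best operating point
            p_min, s_min = p, s
        # Note: if no error occurred so far, s_min is 0 and the very
        # first error is flagged; a real detector would guard this case.
        if p + s > p_min + k * s_min:
            return i
    return None

# A stream with a steady 10% error rate, then a sudden concept change
# after which every prediction is wrong.
stable = [1 if i % 10 == 9 else 0 for i in range(100)]
drifting = stable + [1] * 50
print(detect_drift(stable), detect_drift(drifting))
```

A detector like this only sees a rising error rate, so it cannot tell a real drift (the decision boundary moved) from noise or a change that leaves the boundary intact, which is one reason detection remains hard.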

Meanwhile, class distributions in practice are likely to be imbalanced. Taking binary classification as an example, samples of the majority class vastly outnumber samples of the minority class. Imbalanced data makes temporal data classification harder because the majority and minority classes may swap roles over time. Techniques for handling data imbalance should therefore account for the changing majority/minority roles, including the special case in which the dataset reaches equilibrium and these techniques need to intervene only minimally.

<sub>Source: Ditzler, G., & Polikar, R. (2010). An ensemble based incremental learning framework for concept drift and class imbalance. In The 2010 International Joint Conference on Neural Networks (IJCNN) (pp. 1-8). IEEE.</sub>
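The requirements above can be illustrated with a minimal sketch of per-chunk undersampling. It uses plain random undersampling, not the Diversified Sensitivity Undersampling used in this project, and the function name `undersample_chunk` and the `balance_tol` threshold are illustrative assumptions. The majority class is re-identified on every chunk, so a role swap is handled, and a nearly balanced chunk is passed through untouched.

```python
import random
from collections import Counter

def undersample_chunk(X, y, balance_tol=0.1, seed=0):
    """Randomly undersample the majority class of one binary chunk.

    balance_tol: chunks whose minority/majority ratio is at least
                 1 - balance_tol are treated as balanced (assumption).
    """
    counts = Counter(y)
    if len(counts) < 2:
        return list(X), list(y)           # only one class present
    # Determine the roles from this chunk alone, since they may swap.
    (maj, n_maj), (mino, n_min) = counts.most_common(2)
    if n_min / n_maj >= 1 - balance_tol:
        return list(X), list(y)           # equilibrium: no intervention
    rng = random.Random(seed)
    maj_idx = [i for i, label in enumerate(y) if label == maj]
    keep = set(rng.sample(maj_idx, n_min))  # as many as the minority
    keep.update(i for i, label in enumerate(y) if label == mino)
    order = sorted(keep)                  # preserve temporal order
    return [X[i] for i in order], [y[i] for i in order]

# Chunk 1: class 0 is the majority. Chunk 2: the roles have swapped.
X = list(range(100))
_, y1 = undersample_chunk(X, [0] * 90 + [1] * 10)
_, y2 = undersample_chunk(X, [0] * 10 + [1] * 90)
print(Counter(y1), Counter(y2))
```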

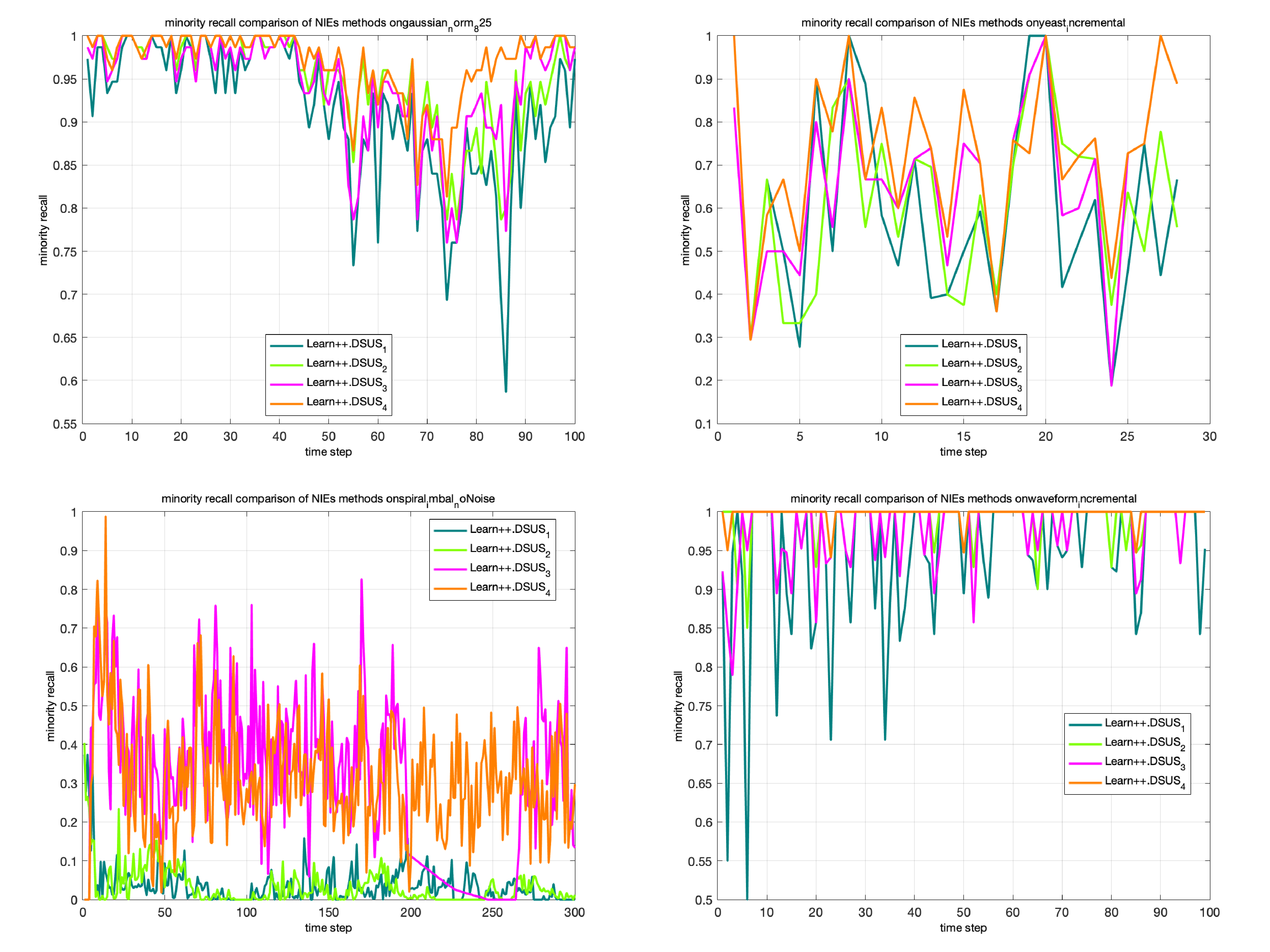

We examine the performance of five classifiers as base classifiers for three Learn++ algorithms under different concept drifts. We combine Diversified Sensitivity Undersampling with the Learn++ model that is most robust to concept drift. A new bonus technique is introduced to improve classifier accuracy under both concept changes and data imbalance. We evaluate the model variants on five concept-drifting datasets and report results and discussion on dynamic and imbalanced classification.
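For readers unfamiliar with chunk-based ensembles, the sketch below shows the general idea of weighting base classifiers by their error on the newest chunk, so that members trained on an outdated concept lose influence. It is a simplification: the actual Learn++ algorithms use more elaborate weighting (for example, averaging errors over time), and neither our bonus technique nor the undersampling step is shown. The names `vote_weights` and `ensemble_predict` are illustrative assumptions.

```python
import math
from collections import defaultdict

def vote_weights(members, X, y, eps_floor=1e-6):
    """Weight each member by log((1 - err) / err) on the newest chunk.

    members: list of functions mapping a sample to a class label.
    """
    weights = []
    for predict in members:
        wrong = sum(predict(x) != label for x, label in zip(X, y))
        err = wrong / len(y)
        # Clip the error: a member no better than chance gets weight 0,
        # and a perfect member gets a large but finite weight.
        err = min(max(err, eps_floor), 0.5)
        weights.append(math.log((1 - err) / err))
    return weights

def ensemble_predict(members, weights, x):
    """Weighted majority vote of all members for one sample."""
    score = defaultdict(float)
    for predict, w in zip(members, weights):
        score[predict(x)] += w
    return max(score, key=score.get)

# Toy members: one fitted to the old concept, one to the new concept.
old_member = lambda x: int(x > 0.5)
new_member = lambda x: int(x < 0.5)
members = [old_member, new_member]

# Newest chunk, drawn after the concept has flipped.
X_new = [0.1, 0.2, 0.3, 0.7, 0.8, 0.9]
y_new = [1, 1, 1, 0, 0, 0]

w = vote_weights(members, X_new, y_new)
print(w, ensemble_predict(members, w, 0.2))
```

Because the weights are recomputed on each new chunk, the old member is kept in the ensemble rather than discarded and can regain influence if its concept recurs.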